The idea was to create AI intentions – code suggestions based on what you’ve just written. They cover a wide range of situations, from generating code to warnings and optimization suggestions. The main goal was to develop an NLP model and use it to rank the list of predicted intentions based on notebook content.

I managed this project and designed the architecture to build a complete working prototype.

Dataset

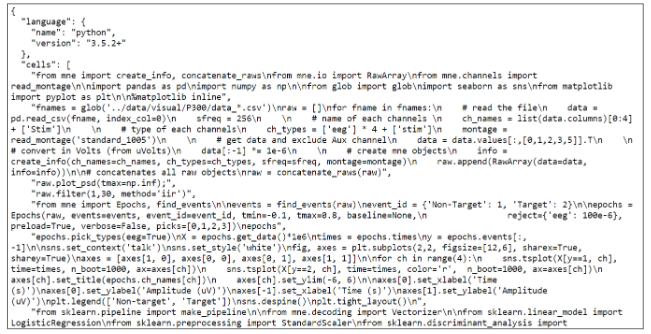

First, we parsed 6 million Jupyter notebooks. After filtering out irrelevant ones (empty, Python 2), we ended up with 3.4 million notebooks.
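The filtering step could look roughly like the sketch below. It is not the project's actual code: it assumes notebooks in the nbformat 4 JSON layout (`cells`, `metadata.language_info`), treats a notebook with no non-blank code cell as "empty", and the helper names `load_notebook` and `is_relevant` are hypothetical.

```python
import json


def load_notebook(path):
    """Read an .ipynb file; a notebook is plain JSON on disk."""
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)


def is_relevant(notebook):
    """Keep notebooks that have code and are not written for Python 2.

    Assumption: nbformat 4 layout. Older formats store cells elsewhere
    and would be treated as empty by this sketch.
    """
    cells = notebook.get("cells", [])
    # "source" may be a string or a list of lines; join handles both.
    has_code = any(
        cell.get("cell_type") == "code"
        and "".join(cell.get("source", "")).strip()
        for cell in cells
    )
    if not has_code:
        return False

    language_info = notebook.get("metadata", {}).get("language_info", {})
    is_python = language_info.get("name") == "python"
    version = str(language_info.get("version", ""))
    if is_python and version.startswith("2"):
        return False
    return True
```

Notebooks with other kernels (R, Julia, Scala) pass this filter, so they can still be counted separately during analysis.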

Exploratory data analysis (EDA) gave us some insights:

- 2.6% of notebooks don't use Python – there are also R, Julia and Scala kernels, along with an insignificant number of more exotic ones (C++, Haskell, OCaml, F#)

- In the last 2 years, the number of Python 3 notebooks grew by 660%

- More than 300,000 notebooks contain sensitive data

Model implementation

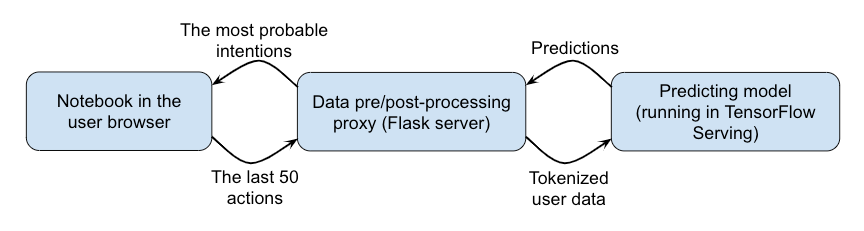

The AI Intentions module is implemented as two standalone services: one processes data going to and from the user, and the other makes predictions. They are CPU- and GPU-intensive, respectively, and are scaled independently.

The prediction model works as follows: each user-side event is mapped to a learned embedding vector, and the result is passed to a deep LSTM network; finally, a dense bottleneck maps the LSTM output features to the probabilities of the next intention.

Tech stack

- TensorFlow 2.0 for training models

- TensorFlow Serving

- Flask (via uwsgi-nginx-flask) for pre/post processing data
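To illustrate how the pre/post-processing service might talk to the prediction service, here is a minimal sketch built on TensorFlow Serving's REST predict endpoint (`/v1/models/<name>:predict` with an `instances` payload and a `predictions` response). The model name `intentions`, the URL, and the helper names are assumptions for illustration; in the project this logic would sit behind a Flask route.

```python
import json
import urllib.request

# Hypothetical address of the TensorFlow Serving container.
SERVING_URL = "http://localhost:8501/v1/models/intentions:predict"


def build_predict_payload(event_ids):
    """Pre-processing: wrap one sequence of event ids in the REST format."""
    return json.dumps({"instances": [event_ids]}).encode("utf-8")


def rank_intentions(response_body, k=5):
    """Post-processing: return the k most probable (intention_id, prob) pairs."""
    probs = json.loads(response_body)["predictions"][0]
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    return [(i, probs[i]) for i in ranked[:k]]


def predict(event_ids, k=5, url=SERVING_URL):
    """Send one request to the serving endpoint and rank the result."""
    request = urllib.request.Request(
        url,
        data=build_predict_payload(event_ids),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return rank_intentions(response.read(), k)
```

Keeping the payload building and ranking as pure functions means the CPU-bound service can be tested without a running model server.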

Architecture

- Input – events generated by the user are mapped to learned embeddings, which are passed to the self-attention layer

- The self-attention output is passed to the deep LSTM (each layer is followed by LayerNorm)

- LSTM outputs are passed to the dense bottleneck layer, regularized with low-rate dropout
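The layers listed above can be sketched in Keras as follows. This is an approximation, not the trained model: every size (vocabulary, sequence length, units, dropout rate) is a made-up placeholder, the plain dot-product `Attention` layer stands in for whatever self-attention variant was actually used, and the final softmax layer is an assumption about how the bottleneck output becomes probabilities.

```python
import numpy as np
import tensorflow as tf

# Hypothetical sizes, chosen only to make the sketch runnable.
NUM_EVENT_TYPES = 500
NUM_INTENTIONS = 100
SEQ_LEN = 64


def build_model(embed_dim=128, lstm_units=256, lstm_layers=2,
                bottleneck_units=64, dropout_rate=0.1):
    events = tf.keras.Input(shape=(SEQ_LEN,), dtype="int32")

    # Each user-side event id is mapped to a learned embedding vector.
    x = tf.keras.layers.Embedding(NUM_EVENT_TYPES, embed_dim)(events)

    # Self-attention: the sequence attends to itself (query = value = x).
    x = tf.keras.layers.Attention()([x, x])

    # Deep LSTM, each layer followed by LayerNorm.
    for i in range(lstm_layers):
        is_last = i == lstm_layers - 1
        x = tf.keras.layers.LSTM(lstm_units, return_sequences=not is_last)(x)
        x = tf.keras.layers.LayerNormalization()(x)

    # Dense bottleneck, regularized with low-rate dropout.
    x = tf.keras.layers.Dense(bottleneck_units, activation="relu")(x)
    x = tf.keras.layers.Dropout(dropout_rate)(x)

    # Probabilities of the next intention.
    probs = tf.keras.layers.Dense(NUM_INTENTIONS, activation="softmax")(x)
    return tf.keras.Model(events, probs)


model = build_model()
batch = np.zeros((2, SEQ_LEN), dtype="int32")  # two dummy event sequences
probs = model(batch).numpy()  # one probability row per sequence
```

Only the last LSTM layer drops the time dimension, so the bottleneck sees a single feature vector summarizing the whole event sequence.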

Results

AI Intentions models achieve up to:

- 60% top-1 accuracy

- 92% top-5 accuracy
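For readers unfamiliar with the metric, top-k accuracy counts a prediction as correct when the true next intention is among the k highest-ranked ones. A minimal sketch, with a hypothetical function name and toy data that has nothing to do with the project's results:

```python
def top_k_accuracy(predictions, targets, k):
    """Fraction of samples whose true label is among the k most probable."""
    hits = 0
    for probs, target in zip(predictions, targets):
        # Indices of the k largest probabilities for this sample.
        top_k = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
        hits += target in top_k
    return hits / len(targets)


# Toy example: three samples, three possible intentions.
toy_predictions = [
    [0.7, 0.2, 0.1],
    [0.1, 0.3, 0.6],
    [0.2, 0.5, 0.3],
]
toy_targets = [0, 1, 0]

top1 = top_k_accuracy(toy_predictions, toy_targets, k=1)  # 1 of 3 correct
top2 = top_k_accuracy(toy_predictions, toy_targets, k=2)  # 2 of 3 correct
```

Top-5 is the more relevant number for a ranked suggestion list, since the user sees several candidates at once.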

Teammates

- Adam Hood, Software Developer

- Vladimir Sotnikov, Data Scientist